Bag of Words Simplified: A Hands-On Guide with Code, Advantages, and Limitations in NLP

How do we make machines understand text? One of the simplest yet most widely-used techniques is the Bag of Words (BoW) model. This approach converts text into a format that can be processed by machine learning algorithms by representing words as features. But while it’s easy to use, BoW also has its limitations. Let’s explore the advantages, disadvantages, and examples of Bag of Words to understand how and when to use it effectively.

What is Bag of Words (BoW)?

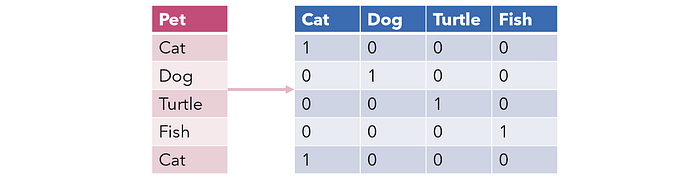

Bag of Words is a method that represents text data by the frequency of words in a document, ignoring grammar and word order. Each document becomes a “bag” of words where the importance is placed on the count of each word.

Advantages of Bag of Words:

Simplicity:

BoW is incredibly easy to understand and implement. It requires minimal processing and can be used as a baseline method in Natural Language Processing (NLP).

Example

from sklearn.feature_extraction.text import CountVectorizer

corpus = ['I love programming', 'Programming is fun', 'I love machine learning']

vectorizer = CountVectorizer()

X = vectorizer.fit_transform(corpus)

print(X.toarray())Output

[[1 1 0 1 1]

[1 0 1 0 1]

[1 1 0 0 1]]Here, the BoW matrix represents the frequency of words in each sentence.

Good for Keyword Detection

Since BoW focuses on the frequency of words, it’s effective for tasks where the presence or absence of specific words matters, such as spam detection or topic classification.

Example: In a spam detection system, BoW can identify frequent words like “win” or “prize,” which are common in spam emails.

Works Well for Small Text

When working with short, simple text data (like tweets or product reviews), BoW offers a quick and efficient solution without the need for more complex preprocessing.

Disadvantages of Bag of Words:

Ignores Word Context

BoW doesn’t take into account the order of words or their relationships, so it can miss out on the meaning. For instance, it treats “not good” and “good not” the same.

Example: In a sentiment analysis model, “I am not happy” and “I am happy” would appear almost identical in a BoW representation, though their meanings differ greatly.

High Dimensionality

As with One-Hot Encoding, BoW can lead to high-dimensional data when working with large corpora, especially if you have many unique words.

Example: For a dataset with 10,000 unique words, each document is represented by a 10,000-dimensional vector, which leads to sparse matrices and increased computational cost.

Sensitive to Stopwords

Common words like “the,” “is,” or “and” can dominate the results if not removed, overshadowing more meaningful terms. Removing these words (stopwords) is often necessary to improve model performance.

Example: If you don’t filter stopwords, phrases like “the dog barks” and “a dog barks” will be represented similarly, though the words “the” and “a” provide little value.

Practical Examples of Bag of Words:

- Basic Example

from sklearn.feature_extraction.text import CountVectorizer

corpus = ['Apple is red', 'Banana is yellow', 'Grapes are green']

vectorizer = CountVectorizer()

X = vectorizer.fit_transform(corpus)

print(X.toarray())[[1 0 1 0 1]

[0 1 1 0 1]

[0 0 1 1 1]]- Text Classification

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.naive_bayes import MultinomialNB

corpus = ['Spam offer for you', 'Meeting at 3 PM', 'Free prize', 'Project deadline extended']

labels = [1, 0, 1, 0] # 1 = Spam, 0 = Not Spam

vectorizer = CountVectorizer()

X = vectorizer.fit_transform(corpus)

clf = MultinomialNB()

clf.fit(X, labels)Here, BoW helps classify spam and non-spam emails by word frequency.

- Stopwords Filtering:

vectorizer = CountVectorizer(stop_words='english')

X = vectorizer.fit_transform(corpus)

print(X.toarray())This removes common stopwords, leaving only meaningful words in the text.

When to Use Bag of Words:

- Text classification with small vocabularies: For tasks like spam detection or sentiment analysis with small datasets, BoW can be a great starting point.

- Feature extraction for simple models: In models like Naive Bayes or Logistic Regression, Bag of Words can be effective for text data.

When to Avoid Bag of Words:

- Large corpora with diverse vocabularies: When dealing with large text datasets, the dimensionality increases drastically. Consider using more efficient methods like TF-IDF or Word2Vec.

- Tasks requiring context: For machine translation, question answering, or chatbot creation, Bag of Words fails to capture word order and context. Use embeddings or sequence-based models instead.

Conclusion: Bag of Words is a simple yet effective method for turning text into numbers, especially for basic NLP tasks. However, it’s important to understand its limitations, particularly with regard to losing context and creating high-dimensional data. Knowing when to use BoW — and when to explore more advanced alternatives — will help you build better text-based models.