Communicating effectively is the most important of all life skills. It enables us to transfer information to the other people and to understand what is said to us. Some examples includes communicating new ideas ,expressing your feelings vocally ( example voice) ,written (example printed articles, emails,sites ), visually (example logos,charts or graphs ) or non-verbally (example body language or gestures).

When it comes to computer it’s designed to understand it’s native language or in other words what we call it machine language or machine code which is incomprehensible to many people . Communication between Humans and computer is not in words but with millions of zeros and one which we call it as binary numbers.

Few decades back programmers used punch cards to communicate with computers and recently layman are using multiple devices like Alexa,Siri to communicate and to get there work done.

Let‘s take a closer look how this devices are now able to communicate with humans and how it has been made possible.

Natural Language Processing (NLP)

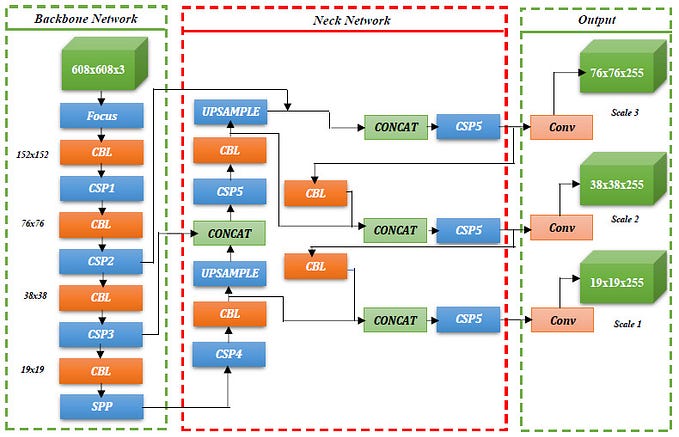

Natural Language Processing or NLP is a sub field of Linguistics, Computer Science, Information Technology and Artificial Intelligence that gives the machines the ability to understand, read, speak and derive meaning from human languages.

Few Examples where we use NLP daily

Autocomplete

Spell Check

Voice Text messaging

Spam Filters

Related keywords on search engines

Siri,Alexa or Google Assistant

Text Preprocessing

It’s a process which simply means to bring your text into form that is predictable and analyzable to build models

Steps of preprocessing

Tokenization

Stopword Removal

N-Grams

Stemming

Word Sense Disambiguation

Count Vectorizer

TF-IDF (TFIDF Vectorizer)

Hashing Vectorizer

TOKENIZATION : Task of breaking a text into pieces is called Token

There are two type of Tokenization

Word Tokenization : Sentence is broken into multiple words

Sentence Tokenization: It’s a process of splitting text into multiple sentences

Stopword Removal: Stopwords are the words which does not add much meaning to a sentence . They can be safely ignored without sacrificing the meaning of the sentence.

N-Grams: Is a contiguous sequence of n items from a given sample of text or speech. Are extensively used in Text Mining and natural language processing tasks.

Stemming: It’s the process of reducing a word to its word stem that affixes to suffixes and prefixes or it’s a process of reducing inflected words to there word stem

Word Sense Disambiguation: It identifies which sense of a word (i.e meaning ) is used in a sentence , when the word has multiple meanings.

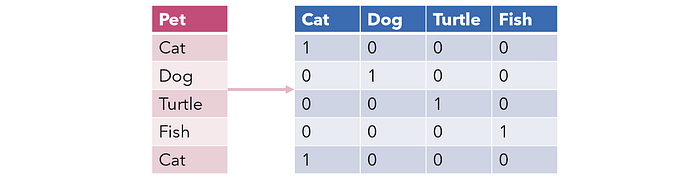

Count Vectorizer: It tokenizes the text along with performing very basic preprocessing and count for the number of times each word appeared in documents

TF-IDF (TFIDF Vectorizer): It stands for “term frequency-inverse document frequency” .

It’s a word frequency scores that try to highlights words that are more interesting . The importance is in scale of 0 and 1

Term Frequency (TF): This summarizes how often a given word appears in a document.

Sent 1 : Good Boy

Sent 2 : Good Girl

Sent 3: Boy Girl Good

TF = ( Number of repeated words in a SENTENCE ) / (Number of words in a SENTENCE)

Inverse Document Frequency (IDF): This down scales words that appears a lot within a document

IDF = LOG ( Number of sentences / Number of sentences containing words )

TF * IDF

Hashing Vectorizer:Convert a collection of text documents to a matrix of token occurrences. This text vectorizer implementation uses the hashing trick to find the token string name to feature integer index mapping.

Thank you for viewing my First Post. Please provide your suggestion and feedback . I would surely try to update current post and it would Encourage me to share few more posts